Can you trust any video after today? …

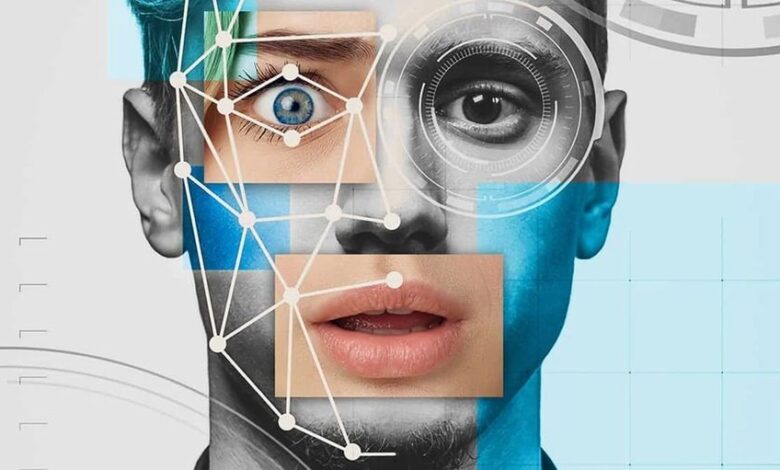

The internet is awash with “deepfakes”: audio, images or videos made using artificial intelligence tools in which people appear to say or do things they never said or did, or in which their appearance has been altered.

Some are known as “digital undressing,” because images are altered to show someone without clothes. Other deepfakes are used to defraud consumers or to damage the reputations of politicians and other public figures.

Artificial intelligence makes it easy to create deepfakes with just a few taps on a keyboard. Governments are keen to confront the phenomenon, but the battle appears to be being lost: deepfake fraud attempts have increased more than 20-fold over the past three years, according to data from the identity verification company Signicat.

What is being done to fight deepfakes?

On May 19, US President Donald Trump signed the “Take It Down Act,” which criminalizes non-consensual AI-generated pornography, also known as “deepfake porn,” and requires social media companies to remove these sexually explicit images.

Last year, the US Federal Communications Commission made the use of AI-generated voices in robocalls illegal.

The ban came two days after the commission issued a cease-and-desist order against the company responsible for an audio deepfake of President Joe Biden. Residents of New Hampshire had received a robocall before the state's presidential primary in which a voice that sounded like Biden's urged them to stay home and “save your vote for the November election.”

The European Union's AI Act requires platforms to label content that is a deepfake. China introduced a similar law in 2023. On April 28, the UK government's Children's Commissioner called for a ban on “nudification” apps, which are widely available online.

Where have deepfakes been in the news?

Deepfake images of pop star Taylor Swift spread widely on social media in January 2024, angering her fans and prompting the White House to express concern.

During the 2024 US presidential election, Elon Musk shared a deepfake campaign video featuring an AI-altered voice of Democratic candidate Kamala Harris, without any label identifying it as manipulated.

In the video, she appears to describe President Joe Biden as “senile” and says she does not “know the first thing about running the country.” The video drew tens of thousands of views. In response, California Governor Gavin Newsom pledged to ban digitally altered political deepfakes, and signed legislation to that effect in September.

How are deepfake videos made?

They are often made using an artificial intelligence algorithm that is trained to recognize patterns in real video recordings of a particular person, a process known as “deep learning.”

It then becomes possible to swap an element of one video, such as a person's face, into another piece of content without it looking like a crude montage. These manipulations are most misleading when combined with “voice cloning” techniques, which break an audio clip of a person speaking into tiny sound fragments that are reassembled into new words that appear to be spoken by the person in the original recording.

How did deepfakes spread?

The technology was initially the preserve of academics and researchers. In 2017, however, Motherboard, a Vice publication, reported that a Reddit user called “deepfakes” had devised a way to create fake videos using open source code. Reddit banned the user, but the practice spread quickly. In its early days, deepfake technology required existing video, a real vocal performance and advanced editing skills.

Today, generative AI systems allow users to create convincing images and videos from simple written prompts. Ask a computer to create a video that puts words in a person's mouth, and it will appear.

How can you spot a deepfake?

These digital forgeries have become much harder to spot as artificial intelligence companies apply the new tools to the vast body of material available on the internet, from YouTube to stock photo and video libraries.

Sometimes there are clear signs that a picture or video was created using artificial intelligence, such as a misplaced limb or a hand with six fingers. There can also be inconsistencies in color between the edited and unedited areas of an image.

Sometimes the mouth movement does not match the speech in deepfake clips. Artificial intelligence may struggle to render fine details of elements such as hair, mouths and shadows, and the edges of objects can sometimes be jagged or show visible pixelation.

But all of this may change as the underlying models improve.

What are the most prominent examples of deepfake technology being used?

In August 2023, Chinese trolls circulated manipulated images of the wildfires on the Hawaiian island of Maui to support claims that the fires were caused by a secret “weather weapon” being tested by the United States.

In May 2023, US stocks dipped briefly after an image appearing to show the Pentagon on fire spread online. Experts said the fake picture bore the hallmarks of having been generated by artificial intelligence.

In February of the same year, a fake audio clip emerged in which a voice that sounded like Nigerian presidential candidate Atiku Abubakar appeared to plot to rig that month's election.

In 2022, a one-minute video clip posted on social media appeared to show Ukrainian President Volodymyr Zelenskiy telling his soldiers to lay down their weapons and surrender to Russia. Other deepfakes are harmless, such as those of football star Cristiano Ronaldo singing Arabic poetry.

What are the dangers associated with this technology?

The fear is that deepfake clips will become so convincing that it will be impossible to distinguish what is real from what is fake. Imagine fraudsters manipulating share prices by producing fake videos of executives issuing updates about their companies, or fabricated clips of soldiers committing war crimes.

Politicians, business leaders and celebrities are among the most vulnerable, given the large number of recordings of them that are available.

Deepfake technology also makes so-called “revenge porn” possible even when no real naked image or video of the person exists, and women are most often targeted. Once a video spreads on the internet, it is almost impossible to contain.

The report of the UK's Children's Commissioner, released in April, highlighted growing fear among children of falling victim to explicit deepfake content.

An additional worry is that spreading awareness of deepfakes will make it easier for people who really were recorded saying or doing objectionable or illegal things to claim that the evidence against them is fake. Some people have already begun to use a “deepfake defense” in court.

What else can be done to curb the spread of deepfakes?

The kind of machine learning used to produce deepfakes cannot easily be reversed to detect fake clips. But a handful of startups, such as Sensity AI in the Netherlands and Sentinel in Estonia, are developing techniques for detecting fake clips, as are many major technology companies in the United States.

Companies including Microsoft have pledged to add digital watermarks to images produced using their artificial intelligence tools, marking them as fake content. OpenAI has developed a way to detect images produced with artificial intelligence, as well as a way to add watermarks to text, though the latter technology has not yet been released, Bloomberg reported.